Introducing Databricks Lakebase: A Next-Gen Database Built for the AI Era

In a move that promises to redefine how modern applications interact with data, Databricks has unveiled its latest innovation: Lakebase.

This new offering is poised to address some of the most pressing limitations of traditional databases — challenges that have become especially apparent in the age of AI.

Why Do We Need a New Kind of Database?

Traditional databases, while foundational to computing, trace their core design to the 1970s, an era with vastly different data demands. Hardware and scale have changed enormously since then, yet the basic architecture, a single engine that owns both compute and storage, has changed comparatively little. As a result:

Databases are not built for AI. Most conventional systems are ill-equipped to handle the scale, speed, and variety of modern AI workloads.

Vendor lock-in is a real problem. Once an application is tied to a specific database, it becomes incredibly difficult to migrate away — limiting innovation and flexibility.

High costs. Proprietary database systems come with steep licensing fees and significant operational overhead.

Migration complexity. Shifting data and workloads to new platforms is often slow, expensive, and risky.

Clearly, a new paradigm is needed — one that embraces open standards, scalability, and AI-first design principles.

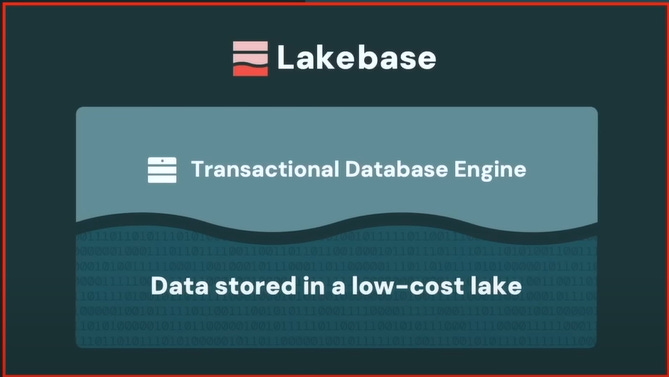

Enter Lakebase: Decoupled Compute and Storage

Lakebase separates compute from storage so that each can scale independently, which brings flexibility, performance, and cost efficiency. Here’s how it works:

1. Compute: A High-Performance Transaction Engine

The compute layer is built on open-source PostgreSQL, one of the most trusted and widely adopted database engines. But Databricks has supercharged it:

Fast startup. It launches in under one second, making it suitable for real-time use cases and rapid development cycles.

Scalability. Compute resources scale up or down with workload demand, which suits dynamic, data-intensive applications.

Future-ready. It’s designed to be serverless, reducing infrastructure management while improving elasticity.
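Because the compute layer is PostgreSQL, an application should be able to connect with an ordinary Postgres driver rather than a proprietary SDK. The sketch below rests on several assumptions. It uses the psycopg 3 driver and a standard libpq connection URI. It requests TLS via `sslmode=require`, on the assumption that the managed endpoint expects it. It reads the host, database, user, and credential from hypothetical environment variables (`LAKEBASE_HOST`, `LAKEBASE_DB`, `LAKEBASE_USER`, `LAKEBASE_PASSWORD`). The actual hostname and authentication method depend on how your instance is set up.

```python
import os
from urllib.parse import quote


def build_dsn(host: str, dbname: str, user: str, password: str, port: int = 5432) -> str:
    """Build a standard libpq connection URI, percent-encoding the credentials."""
    # Usernames are often email addresses and tokens may contain reserved
    # characters such as '@' or '/', so they are URL-encoded before embedding.
    return (
        f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{quote(dbname, safe='')}?sslmode=require"
    )


def main() -> None:
    # Hypothetical environment variable names; nothing here is a Lakebase-specific API.
    required = ["LAKEBASE_HOST", "LAKEBASE_DB", "LAKEBASE_USER", "LAKEBASE_PASSWORD"]
    missing = [name for name in required if name not in os.environ]
    if missing:
        print("Set these environment variables first:", ", ".join(missing))
        return

    import psycopg  # third-party driver: pip install "psycopg[binary]"

    dsn = build_dsn(
        host=os.environ["LAKEBASE_HOST"],
        dbname=os.environ["LAKEBASE_DB"],
        user=os.environ["LAKEBASE_USER"],
        password=os.environ["LAKEBASE_PASSWORD"],
    )
    # From here on this is plain PostgreSQL: open a connection and run a query.
    with psycopg.connect(dsn) as conn:
        with conn.cursor() as cur:
            cur.execute("SELECT version()")
            print(cur.fetchone()[0])


if __name__ == "__main__":
    main()
```

Keeping the URI builder separate from the connection step means it can be checked without a live instance. Other Postgres clients, such as `psql` or a JDBC driver, would take the same host, database, and credentials.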

2. Storage: Open and Affordable Data Lake Integration

Rather than relying on expensive proprietary storage formats, Lakebase uses Databricks’ native data lake infrastructure, which offers:

Low-cost data storage.

Open standards that keep your data accessible and portable.

Lakebase is deeply integrated with the Databricks Lakehouse Platform, most notably through Unity Catalog, which provides a unified governance model for all data assets, both operational and analytical.

The future of data isn’t locked in a box — it’s in the lake. And with Lakebase, Databricks is leading the way.

Why Lakebase Matters

With Lakebase, Databricks is breaking down the barriers of traditional database systems. It offers:

Freedom from vendor lock-in, thanks to open formats and standards.

Lower total cost of ownership, by leveraging cheap, scalable cloud data lakes.

A database built for modern applications, especially those driven by AI.

In essence, Lakebase is more than just a new product — it’s a new mindset. One that recognizes that data should be open, fast, scalable, and AI-first. For developers, data teams, and enterprises looking to build the future, Lakebase is a welcome evolution.

“Thank you for your time, and please feel free to suggest any other topic you’d be interested in reading an article about.”